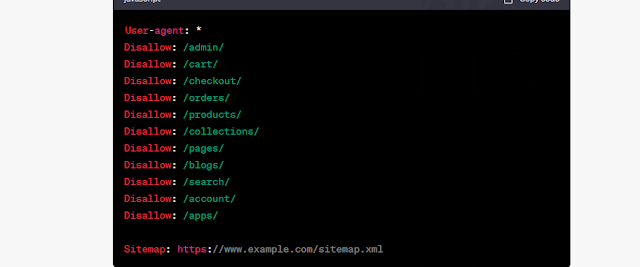

In Shopify, the robots.txt file tells search engine crawlers which parts of your website they should and should not crawl. Strictly speaking, robots.txt controls crawling rather than indexing: a disallowed URL can still end up in search results if other pages link to it. The file follows a simple line-based format. Here's an example of how it can be structured for a Shopify website:

Let's break down the elements of this example:

`User-agent: *` specifies that the rules apply to all search engine crawlers.

`Disallow:` indicates the directories or pages that should not be crawled. In this example, `/admin/`, `/cart/`, `/checkout/`, and so on are disallowed.

`Sitemap:` specifies the location of your sitemap file. This line is optional but recommended, because it helps search engines discover the pages on your website.
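To see how a crawler interprets these three kinds of lines, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules are an assumption built only from the paths named above, and `example-store.com` is a placeholder domain; a real Shopify store's robots.txt contains many more rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt built only from the directives described above.
# "example-store.com" is a placeholder domain, not a real store.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /checkout/
Sitemap: https://example-store.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() takes an iterable of lines, so no network request is made.
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/admin/settings", "/cart/", "/checkout/", "/products/blue-shirt"]:
    url = "https://example-store.com" + path
    # Googlebot has no group of its own here, so the "User-agent: *" rules apply.
    status = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(path, status)

# site_maps() (Python 3.8+) returns the Sitemap URLs listed in the file.
print(parser.site_maps())
```

Because `Disallow` values are matched as path prefixes, `/admin/settings` is blocked by the `/admin/` rule, while a product page that matches no rule stays crawlable.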

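You can customize the robots.txt file for your specific needs by adding or removing `Disallow` directives as necessary. Shopify generates a default robots.txt for every store, and you change it by editing the `robots.txt.liquid` template in your theme. Keep in mind that robots.txt is publicly accessible, so it is not a way to hide private pages, and be cautious when modifying it to avoid accidentally blocking important parts of your website from search engine crawlers.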
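One way to guard against accidental blocking is to test an edited file against a list of pages you know must stay crawlable before publishing it. The sketch below is a hypothetical helper (`blocked_paths` is not a Shopify or library function) built on `urllib.robotparser`. That parser uses plain prefix matching and does not understand the `*` and `$` wildcards that Google's crawler supports, so treat it as a rough check rather than an exact model of any search engine.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, paths: list[str], user_agent: str = "*") -> list[str]:
    """Return the entries of `paths` that `robots_txt` would block for `user_agent`.

    Hypothetical helper for checking an edited robots.txt before publishing it.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # can_fetch() only looks at the path of the URL, so a placeholder host is fine.
    return [
        p for p in paths
        if not parser.can_fetch(user_agent, "https://example-store.com" + p)
    ]


# An edited file with an over-broad rule: "Disallow: /collections" is a prefix
# match, so it also blocks every URL under /collections/.
edited = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /collections
"""

important = ["/", "/products/blue-shirt", "/collections/summer", "/pages/contact"]
print(blocked_paths(edited, important))  # → ['/collections/summer']
```

A non-empty result is a signal to review the rule that caused it before saving the template.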
